Robots have long thrived in tightly controlled environments such as factory assembly lines, but they still struggle in the messy, variable settings where people live and work. Microsoft Research is positioning what it calls “Physical AI,” the application of agentic AI to physical systems, as the next step toward robots that can perceive, reason, and act more autonomously alongside humans in less structured spaces.

At the center of that push is Rho-alpha (ρα), Microsoft Research’s first robotics model derived from Microsoft’s Phi series of vision-language models. The organization says the model is designed to translate natural-language instructions into control signals for robotic systems. Its initial focus is bimanual manipulation tasks that require coordinated two-handed action, such as interacting with switches, wires, knobs, sliders, and plug-and-socket assemblies.

Microsoft Research describes Rho-alpha as a “VLA+” model, emphasizing that it extends beyond traditional vision-language-action systems by incorporating additional sensing and learning capabilities. On the perception side, the model adds tactile sensing, and the team says work is underway to accommodate other modalities, such as force. On the learning side, Microsoft Research says it is working to enable Rho-alpha to continue improving during deployment by learning from user feedback, a capability intended to help robots adapt to dynamic situations and human preferences over time.

To demonstrate the model’s capabilities, Microsoft Research showed Rho-alpha interacting with BusyBox, a physical interaction benchmark the lab recently introduced, in which robots perform a variety of manipulation actions guided by natural-language prompts. The team also described additional experiments on a dual-arm setup equipped with tactile sensors, performing tasks such as plug insertion and toolbox packing. In one example, the system struggled to complete a plug insertion and relied on real-time human guidance to recover and finish the task, underscoring the importance of human-in-the-loop correction methods as these systems move toward broader deployment.

Data availability remains a central constraint in robotics, especially at the scale needed to train foundation models that can generalize across tasks and environments. Microsoft Research says simulation plays a major role in its strategy to address the shortage of pre-training-scale robotics data, particularly datasets that include tactile feedback and other less common sensing modalities. The team’s training pipeline uses a multistage reinforcement learning process with NVIDIA Isaac Sim, combining synthetic trajectories with commercial and openly available physical demonstration datasets. Microsoft Research says Rho-alpha’s tactile-aware behaviors come from co-training on physical and simulated trajectories alongside web-scale visual question answering data, and it plans to extend the same blueprint to additional sensing modalities and a broader range of real-world tasks.

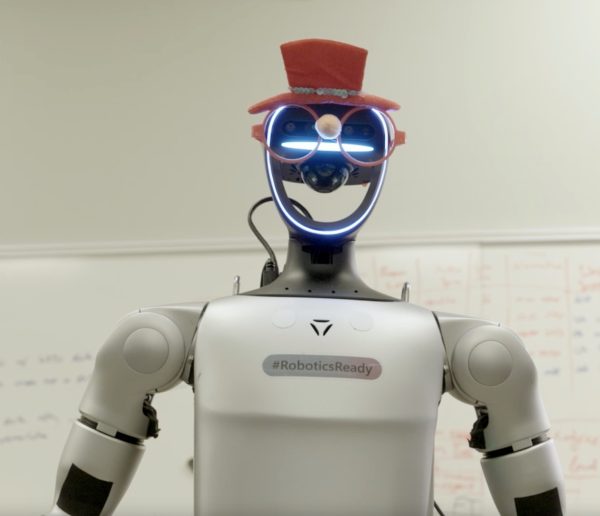

Microsoft Research says Rho-alpha is currently under evaluation on dual-arm setups and humanoid robots, and it plans to publish a technical description in the coming months. The organization is also opening an on-ramp for outside stakeholders, inviting robotics manufacturers, integrators, and end users to express interest in the Rho-alpha Research Early Access Program to evaluate the model for specific robots and scenarios. Microsoft Research also said Rho-alpha will be made available via Microsoft Foundry at a later date, signaling a path from research access to broader tooling and deployment workflows.

KEY QUOTES

“The emergence of vision-language-action (VLA) models for physical systems is enabling systems to perceive, reason, and act with increasing autonomy alongside humans in environments that are far less structured.”

Ashley Llorens, Corporate Vice President and Managing Director, Microsoft Research Accelerator

“While generating training data by teleoperating robotic systems has become a standard practice, there are many settings where teleoperation is impractical or impossible. We are working with Microsoft Research to enrich pre-training datasets collected from physical robots with diverse synthetic demonstrations using a combination of simulation and reinforcement learning.”

Abhishek Gupta, Assistant Professor, University of Washington

“Training foundation models that can reason and act requires overcoming the scarcity of diverse, real-world data. By leveraging NVIDIA Isaac Sim on Azure to generate physically accurate synthetic datasets, Microsoft Research is accelerating the development of versatile models like Rho-alpha that can master complex manipulation tasks.”

Deepu Talla, Vice President of Robotics and Edge AI, NVIDIA